Bloomberg’s Bruno Dupire on the importance of understanding how machines learn

April 21, 2017

From startups to hedge fund giants, the finance industry is rapidly pushing beyond the large statistical models the quantitative community used for years, creating new artificial intelligence systems that analyze massive amounts of data and improve themselves without human intervention.

Yet, for all this innovation, it’s still common to view these smart, autonomous systems as black boxes. The tendency is to throw a lot of information into the system and hope that something good will come out of it. But, in order to realize the full potential of machine learning in finance, it’s critical in these early days to understand what the system is doing, how it’s learning, and what data it’s using.

It’s this theme – understanding what machines understand – that Bruno Dupire, renowned quant finance researcher and head of Quantitative Research at Bloomberg, explored at the sold-out “Machine Learning in Finance Workshop 2017” at The Data Science Institute at Columbia University earlier today.

“There is an enormous interest in big data, analytics and statistics and their application in finance,” says Professor Martin Haugh, co-director of Columbia’s Center for Financial Engineering.

Dupire highlighted a range of examples of how machine learning can be used in the financial market, including for predicting defaults, classifying dividends, analyzing market sentiment, and pricing illiquid assets. Many of today’s time series forecasting strategies come back to mean reversion linked to behavioral attitudes of traders. For example, when traders figured out that daily volatility is commonly higher than weekly volatility, they created statistical arbitrage strategies to exploit that mean reversion. By comparison, with machine learning, you can take advantage of more non-linear methods of analyzing data to develop true time series forecasting strategies, he explains.
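One common way to check for the kind of mean reversion described above is a variance ratio: if returns were independent, the variance of 5-day returns would be about 5 times the variance of 1-day returns, so a ratio below 1 suggests that daily moves tend to partially reverse. The sketch below is only an illustration on simulated data; the function name `variance_ratio` and the two synthetic series are assumptions, not anything described in the talk.

```python
import numpy as np

def variance_ratio(log_prices, k=5):
    """Variance of k-day log returns divided by k times the variance of 1-day log returns."""
    log_prices = np.asarray(log_prices, dtype=float)
    r1 = np.diff(log_prices)                    # 1-day log returns
    rk = log_prices[k:] - log_prices[:-k]       # overlapping k-day log returns
    return rk.var(ddof=1) / (k * r1.var(ddof=1))

rng = np.random.default_rng(0)
n = 5000

# Series 1 (synthetic): a pure random walk, where the ratio should be close to 1.
random_walk = np.cumsum(rng.normal(0.0, 0.01, n))

# Series 2 (synthetic): a mean-reverting AR(1) log price, where the ratio should fall below 1.
shocks = rng.normal(0.0, 0.01, n)
mean_reverting = np.zeros(n)
for t in range(1, n):
    mean_reverting[t] = 0.8 * mean_reverting[t - 1] + shocks[t]

vr_rw = variance_ratio(random_walk)
vr_mr = variance_ratio(mean_reverting)
print(f"random walk: {vr_rw:.2f}, mean-reverting: {vr_mr:.2f}")
```

A ratio well below 1 on real data would be consistent with daily volatility looking high relative to weekly volatility, though it says nothing by itself about whether the effect is tradable after costs.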

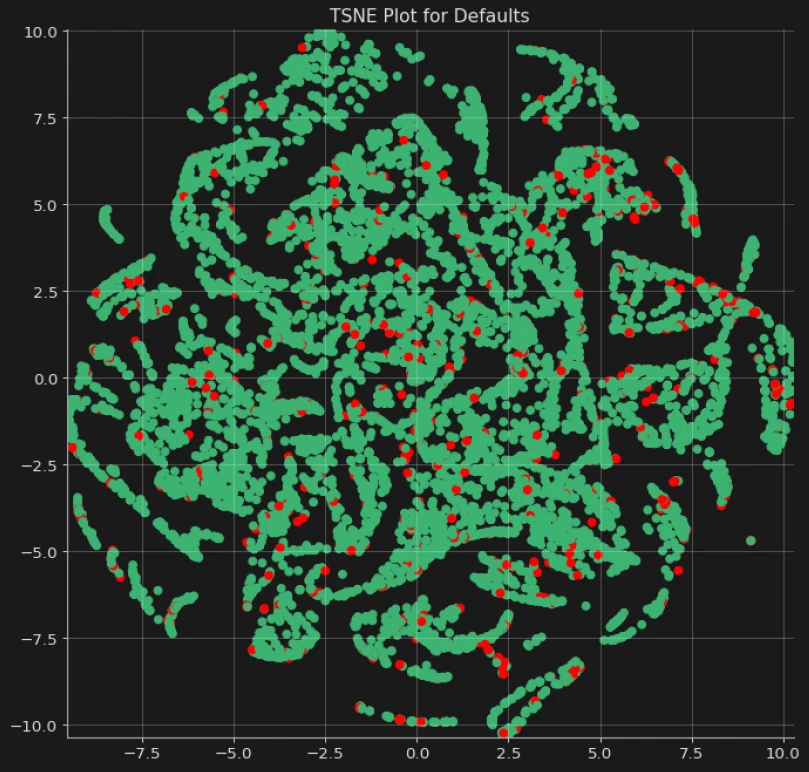

For instance, his research team is using classification strategies to predict whether a company will go bankrupt. By injecting different features, or data points, into a neural network – including debt-equity ratio, long term debt, volatility levels, and past examples of bankruptcy – you can try to understand the parameters or features that are linked to and can predict bankruptcy. It’s a typical example of a training task, Dupire explains, where you train the neural network on past examples to establish links. Then you apply the trained system to a collection of stocks in a current situation to develop a scoring index of whether they’re likely to default.
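The train-then-score workflow described here can be sketched with a very small neural network. This is a minimal illustration, not Bloomberg’s model: the data is synthetic, the three standardized features merely stand in for debt-equity ratio, long-term debt, and volatility, and the names `train` and `score` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train(X, y, hidden=8, lr=0.5, epochs=1000, seed=0):
    """Fit a one-hidden-layer network with full-batch gradient descent on cross-entropy loss."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W1 = rng.normal(scale=0.5, size=(d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=0.5, size=hidden)
    b2 = 0.0
    for _ in range(epochs):
        h = np.tanh(X @ W1 + b1)                   # hidden activations
        p = sigmoid(h @ W2 + b2)                   # predicted default probability
        d_out = (p - y) / n                        # gradient of mean loss w.r.t. output logit
        d_h = np.outer(d_out, W2) * (1.0 - h**2)   # backpropagate through tanh
        W2 = W2 - lr * (h.T @ d_out)
        b2 = b2 - lr * d_out.sum()
        W1 = W1 - lr * (X.T @ d_h)
        b1 = b1 - lr * d_h.sum(axis=0)
    return W1, b1, W2, b2

def score(params, X):
    """Return a default score between 0 and 1 for each row of X."""
    W1, b1, W2, b2 = params
    return sigmoid(np.tanh(X @ W1 + b1) @ W2 + b2)

# Synthetic "past examples": columns stand in for debt-equity ratio, long-term debt, volatility.
rng = np.random.default_rng(0)
X = rng.normal(size=(2000, 3))
true_logit = 1.5 * X[:, 0] + 1.0 * X[:, 1] + 2.0 * X[:, 2] - 2.0
y = (rng.random(2000) < sigmoid(true_logit)).astype(float)

params = train(X, y)
accuracy = ((score(params, X) > 0.5) == (y == 1.0)).mean()

# Score two hypothetical "current" companies: one highly levered and volatile, one not.
risky, safe = score(params, np.array([[2.0, 2.0, 2.0], [-2.0, -2.0, -2.0]]))
print(f"training accuracy {accuracy:.2f}, risky {risky:.2f}, safe {safe:.2f}")
```

In practice the score would be evaluated on held-out companies rather than on the training set, which is used here only to keep the sketch short.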

Another example of machine learning that Bloomberg already has in its quant platform is an application called “nearest neighbor” or “déjà vu.” The application uses a clustering approach to give insights into the evolution of stock prices. Using the application, you pick a stock and a time frame of, say, the last two weeks. The application screens the past data and identifies time windows with a similar price pattern: the nearest neighbors, or situations in the past that resemble the current one. The application then analyzes what happened after each of these time windows to see whether a marked pattern of behavior followed. Maybe, for instance, after a sharp drop, the stock tends to bounce back because of behavioral factors like an overreaction.

Using these tools to identify past situations that are similar to the current one, you can then analyze what happened after and form a judgment about how that can be applied to the current situation.
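The idea of searching history for look-alike windows can be sketched in a few lines. This is an assumption-laden illustration, not the Bloomberg application: similarity is measured here as Euclidean distance between windows of daily log returns, and `nearest_windows` and its parameters are hypothetical names.

```python
import numpy as np

def nearest_windows(prices, window=10, horizon=5, k=5):
    """Find the k past windows most similar to the latest one and what followed each.

    Returns (start indices, distances, cumulative log return over the next `horizon` days).
    """
    log_ret = np.diff(np.log(np.asarray(prices, dtype=float)))
    query = log_ret[-window:]                      # the current situation
    # Only keep windows whose aftermath ends before the query window begins.
    n_cand = len(log_ret) - 2 * window - horizon + 1
    if n_cand <= 0:
        raise ValueError("not enough history for the requested window and horizon")
    cands = np.lib.stride_tricks.sliding_window_view(log_ret, window)[:n_cand]
    dist = np.linalg.norm(cands - query, axis=1)   # distance of each past window to the query
    idx = np.argsort(dist)[:k]
    after = np.array([log_ret[s + window:s + window + horizon].sum() for s in idx])
    return idx, dist[idx], after

# Synthetic price series with the latest 10-day pattern deliberately planted at day 100.
rng = np.random.default_rng(1)
r = rng.normal(0.0, 0.01, 300)
r[100:110] = r[-10:]
prices = 100.0 * np.exp(np.concatenate([[0.0], np.cumsum(r)]))

starts, dists, fwd = nearest_windows(prices, window=10, horizon=5, k=5)
print(starts, np.round(fwd, 4))
```

On this synthetic series the closest match is the planted window; on real data the forward returns of the neighbors would be the raw material for the trader’s judgment, not a forecast in themselves.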

“The trader is doing this in his head with the limited experience he has of a small time stretch and limited market, he’s trying to see how the current situation resonates with past experiences,” explains Dupire. “But we now have ways to do this in a much more automated and broad way.”

Just as critical as the applications themselves is an understanding of what the machine is doing and why. Bloomberg is developing tools to help better analyze the connections being created within the neural net, its learning process and the status of the data.

“These kinds of tools are quite useful to gaining a better intuition, to know what you need to suppress, simplify or add,” says Dupire. “Not many people are doing this.”

For example, some of Bloomberg’s tools allow you to visualize the pattern of the different connections within a neural network and track the level of activity at different neurons. In a network, the neurons connect to each other, each one transforming the weighted sum of the inputs it receives and passing the result on to the neurons it feeds. Much like brain imaging that pinpoints how different activities – whether looking at a picture or playing music – light up different parts of the brain, Bloomberg’s visualization tools allow you to track how strongly a given input example activates the different neurons on the way to producing an output.
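To make the notion of tracking neuron activity concrete, the sketch below runs one example through a tiny network and records every neuron’s activation at each layer. It is only an illustration under stated assumptions: the weights are random, ReLU is an arbitrary choice of activation function, and `forward_with_activations` is a hypothetical helper, not one of the Bloomberg tools.

```python
import numpy as np

def forward_with_activations(x, layers):
    """Run x through the network and return the activation vector of every layer.

    `layers` is a list of (W, b) pairs; each neuron applies ReLU to its weighted input sum.
    """
    activations = []
    a = np.asarray(x, dtype=float)
    for W, b in layers:
        a = np.maximum(0.0, a @ W + b)   # weighted sum of inputs, then ReLU
        activations.append(a)
    return activations

# A tiny network with random (untrained) weights: 4 inputs -> 6 hidden neurons -> 3 outputs.
rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(4, 6)), np.zeros(6)),
    (rng.normal(size=(6, 3)), np.zeros(3)),
]

example = np.array([0.5, -1.0, 2.0, 0.1])
acts = forward_with_activations(example, layers)
for i, a in enumerate(acts):
    # The most active neuron per layer is the kind of signal a visualization might highlight.
    print(f"layer {i}: activations {np.round(a, 2)}, most active neuron {int(np.argmax(a))}")
```

Feeding many examples through and comparing the recorded activations is one simple way to see which neurons respond to which kinds of input.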

For Dupire, Bloomberg’s work around machine learning is a return to his first passion – artificial intelligence. Before he dove into developing pioneering financial statistical models at companies including Credit Suisse and Paribas, Dupire was involved in the early days of applying AI to finance. In the late 1980s, he developed a study on applying neural networks to time series forecasting, the finance community’s first such attempt, which he sold to the French government’s investment arm.

Dupire has overseen all of Bloomberg’s quantitative research activity for the past seven years. Now, half of his team is focused on AI. “Over the past few years, my team has been full speed in the field. It’s impressive everything that’s going on.”